St*AR*ybook

Interactive Space Project

Adam Omar

KU_CDE505

Unlike the Data Visualization Project, I didn't have a set plan for my Interactive Space. When Professor Miller first mentioned the project, I wanted to do something that combined an Arduino and AR; however, the ideas I came up with weren't that thought out.

The first idea was essentially a website that lets users interact with data pulled from critic sites to make their own comparisons, such as comparing particular years or months, presenting in-depth data on a particular year of film, or viewing an up-to-date timeline of movie quality.

(Inspiration: https://galaxy-of-covers.interactivethings.io/ )

Building on this idea, my second concept was a physical device that used an Arduino to interact with a projection-mapped visual. The device would be a stylized version of a film reel projector, and the user could mount two reel-like cartridges to compare years in cinema. The user would also cycle through information using a crank on the side of the device, similar to a wind-up camera.

(Inspiration: https://play.date/ )

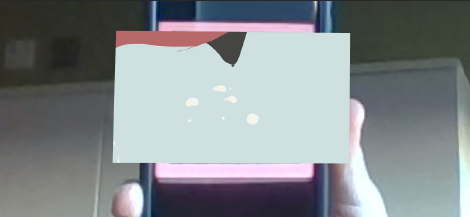

The third idea was a web-mounted AR program that displays an animated scene in AR space when the user views the DVD or Blu-ray cover of a movie through their phone's camera. The user could also interact with the AR scene, which would show information about the movie, such as reviews, ratings, and actors.

Between the three ideas, I gravitated towards the third. However, with fewer customers purchasing physical copies of movies, Professor Miller did not feel the idea fit the project's scale. With some time to think about it, I pushed off the project and focused on my Data Viz. Yet, through working on the Data Viz, I realized the AR idea had promise, but its implementation needed to shift. Since most of my grad projects revolve around storytelling, I decided to adapt the idea with that premise in mind, finalizing my project as an interactive storybook where the user interacts with animated pages of a book through a web-mounted AR program on their phone, tablet, or PC.

After getting approval from Professor Miller, I went down the rabbit hole of Web AR. I first looked into the main companies recommended in the Interactive Spaces PowerPoint: 8th Wall, Zappar, Adobe Aero, and Brio.

8th Wall looked the most promising because several grad students had used their service in the past through an educational license. However, when I reached out to them, the 8th Wall representative said this license no longer exists and I would have to pay the standard subscription fee to use their services. So that was a major bust. I then looked at the other providers (Zappar, Brio, and Adobe Aero), but I found they lacked the tools and features I needed for my project's vision. At this point, I was starting to get worried that I wouldn't be able to find a web-based AR tool without having to dish out a lot of money. But then I found AR.js!

I probably wouldn't have wasted all that time scouring the internet if I had remembered this library was on Prof. Miller's Interactive Spaces slide, but regardless, AR.js is a lightweight JavaScript library that enables web-based AR! It can anchor a 3D model using image recognition, location-based recognition, or marker recognition! It also includes a trigger system that lets the user interact with the 3D environment. I think the best feature is that it's all housed on the web, so any user can access it without needing to install an app.

With my library found, I wrote out a todo list which highlighted the core features I wanted to implement in my prototype.

- The AR scene is anchored to a position in 3D space
- Have an animated AR scene
- The AR program recognizes different pages and can select different AR scenes to load accordingly
- Have the AR scenes be multiple flat animated layers popped out from the book, to give a papery, pop-up aesthetic
- Have the program recognize user actions, such as a screen press or swipe, and do some action

With a new library to figure out, I went straight to the documentation. It includes several demos showing off the library's features; however, it doesn't go in-depth about the functions available, so I had to resort to looking through project examples over on Stack Overflow.

The first feature I needed to implement was how the system would track the AR scenes. AR.js provides three different flavors for anchoring your 3D scene in AR: image recognition, which displays your scene if the camera recognizes a specific image; geolocation, where the user has to be in a particular location for the scene to be revealed; and marker recognition, which is the same as image recognition, except simplified to use markers. Between the three, I pursued the marker method because I believed the system would have a smoother time recognizing a custom marker than an entire page of a storybook. In hindsight, however, image recognition is probably more suitable for a market demo. Regardless, I looked into a bunch of Stack Overflow posts and was able to make a cube appear on the default “hiro” marker!
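For readers who want to see roughly what such a first test looks like, here is a minimal sketch of a marker-based scene built from AR.js's A-Frame elements. It is not the project's actual code: the helper name `hiroCubeScene`, the cube's color, and its position are illustrative assumptions, and the host page is assumed to already load the A-Frame and AR.js scripts.

```javascript
// Build the markup for a minimal marker-based AR.js scene (A-Frame flavor).
// Assumption: the page that receives this markup already loads A-Frame and AR.js.
function hiroCubeScene(color = "tomato") {
  return [
    "<a-scene embedded arjs>",
    // The built-in "hiro" preset is the default demo marker.
    '  <a-marker preset="hiro">',
    // Anything inside the marker element is shown while the marker is tracked.
    `    <a-box position="0 0.5 0" color="${color}"></a-box>`,
    "  </a-marker>",
    "  <a-entity camera></a-entity>",
    "</a-scene>",
  ].join("\n");
}

console.log(hiroCubeScene());
```

Pasting the printed markup into the body of a page that loads both libraries should be enough to try it with a printed "hiro" marker.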

Second was figuring out how to animate the scenes. My goal was to have several looping videos that play out a page's scene. At first, I tested just playing an MP4 file when the default marker was detected. This worked: when the "hiro" marker was detected, the ending credits of "Little Witch Academia" played (as well as its audio). This was good, but I wanted to layer videos over each other to create the popped-up look. This requires a video format that allows transparency and can run on the web. Through some digging, I found that the VP8/VP9 codecs in a .webm container fit both of those requirements. However, the way I set up my scene did not mesh well with the built-in video player, and transcoding to a WebM file is a bit convoluted.
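To make the layering idea concrete, the sketch below generates A-Frame markup for a stack of video planes, one per transparent WebM file. Everything specific here is an assumption for illustration: the helper name `popUpLayers`, the file names, the 0.1-unit spacing, and the choice to lay the planes flat over the marker. Whether the alpha channel actually renders depends on the browser.

```javascript
// Generate <a-assets> videos plus one flat plane per layer, stacked upward.
// Hypothetical helper: names, sizes, and spacing are illustrative guesses.
function popUpLayers(files, spacing = 0.1) {
  const videos = files.map(
    (file, i) =>
      `  <video id="layer${i}" src="${file}" loop muted playsinline webkit-playsinline></video>`
  );
  const planes = files.map((_, i) => {
    // Each later layer sits a little higher above the page than the one before.
    const y = (i * spacing).toFixed(2);
    return (
      `<a-entity position="0 ${y} 0" rotation="-90 0 0" ` +
      `geometry="primitive: plane; width: 1; height: 1" ` +
      `material="shader: flat; src: #layer${i}; transparent: true"></a-entity>`
    );
  });
  return {
    assets: `<a-assets>\n${videos.join("\n")}\n</a-assets>`,
    planes: planes.join("\n"),
  };
}

const page = popUpLayers(["background.webm", "cat.webm", "hat.webm"]);
console.log(page.assets);
console.log(page.planes);
```

The `assets` block would go directly inside the scene, and the `planes` inside the marker element for that page.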

You see, my original workflow for creating these animated scenes was to first scan the pages, then isolate the objects in Photoshop, followed by importing them into After Effects, animating them, and exporting each layer into its own video file. However, Adobe no longer supports WebM files. It looked like Adobe used to support the format, but I couldn't find any sources that state which version did. I then found an extension that claimed to add WebM as an option in Adobe Media Encoder. However, when I tested it, the files did not show up as transparent.

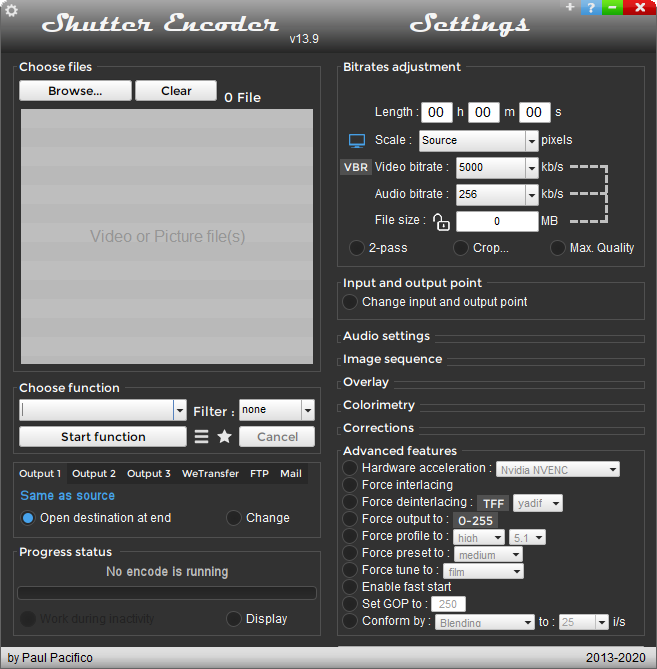

The solution I came up with was to export the video files as QuickTime .mov files, explicitly telling the encoder to include RGB+Alpha channels, and then run those files through another transcoder that has VP8 and WebM support. In this case, I used Shutter Encoder.
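The second step of that pipeline was done in Shutter Encoder's interface. Purely as a command-line comparison (not the tool used here), ffmpeg can perform a similar conversion, and the sketch below assembles such a command. The file names and the helper name `webmAlphaArgs` are hypothetical, and the flags assume an ffmpeg build that includes the libvpx encoder.

```javascript
// Assemble ffmpeg arguments for converting an alpha-channel .mov into a
// VP8 WebM that keeps its transparency. File names are placeholders.
function webmAlphaArgs(input, output) {
  return [
    "-i", input,
    "-c:v", "libvpx",        // VP8 encoder
    "-pix_fmt", "yuva420p",  // pixel format that carries an alpha plane
    "-auto-alt-ref", "0",    // libvpx cannot encode alpha with alt-ref frames on
    output,
  ];
}

const args = webmAlphaArgs("page1_cat.mov", "page1_cat.webm");
console.log(["ffmpeg", ...args].join(" "));
```

Running the printed command once per exported layer would yield one WebM per layer.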

Next was creating a custom marker for the system to track. This took a couple of days to figure out because I learned there are two ways AR.js sets up its scenes, and the two techniques CANNOT be mixed (foreshadowing). After testing out a few demos and user-made examples, I found out that I was overcomplicating this part and just needed to learn how the "marker" function calls custom patterns. I tested my custom page recognition with a marker I based on the symbol “Veles,” which in Slavic mythology represents the “guardian of the Heavenly Gates that separates the spiritual world from the physical world.” I found the symbolism narratively fitting for an AR project, though I probably won't use it in the final version. Regardless, this test was able to display different content depending on which marker was shown, so that was a success!
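As an illustration of page-specific markers, the sketch below emits one AR.js `<a-marker>` per page, each pointing at its own pattern file through the `type="pattern"` and `url` attributes. The helper name `pageMarkers`, the `.patt` file names, and the placeholder content are assumptions, not the project's real assets.

```javascript
// Emit one pattern marker per storybook page; each marker wraps its own content.
// Hypothetical helper: file names and content below are placeholders.
function pageMarkers(pages) {
  return pages
    .map(({ pattern, content }) =>
      [
        `<a-marker type="pattern" url="${pattern}">`,
        `  ${content}`,
        "</a-marker>",
      ].join("\n")
    )
    .join("\n");
}

console.log(
  pageMarkers([
    { pattern: "markers/page1.patt", content: '<a-box color="red"></a-box>' },
    { pattern: "markers/page2.patt", content: '<a-sphere color="blue"></a-sphere>' },
  ])
);
```

Because each marker carries its own children, showing a different printed marker to the camera reveals a different scene.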

Now in this first version, I tested these features as separate files. They all worked; however, the real test was combining them. And that’s when the issues presented themselves.

When I created the custom marker system, I set up the scene so that the camera acted as the origin point (meaning the camera sits at x = 0, y = 0, and z = 0). For the animated scene, on the other hand, the marker became the origin. The simple solution would be to switch one of the systems to match the setup/origin of the other; however, it can never be that simple when it comes to coding. The reason these two features use different setups is that this was the only way I could currently make them work.

Granted, I’m not saying this is the ONLY way they work; however, I couldn’t find any documentation that showed these features working with both setups. There definitely has to be a way to combine the two seamlessly, but that will take a day or two of staring at a screen and scouring the internet for clues (just like the good ol’ days back in my C.S. undergrad~).
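In AR.js's A-Frame build, one way these two conventions commonly show up is `<a-marker>` (the camera stays at the origin and content is attached to the marker) versus `<a-marker-camera>` (the marker defines the origin and the camera moves). Whether the project's files used exactly these elements is an assumption; the sketch below only contrasts the two arrangements, and `sceneFor` is a hypothetical helper.

```javascript
// Contrast two AR.js A-Frame scene arrangements (illustrative only).
function sceneFor(origin, content) {
  if (origin === "camera") {
    // Camera stays at 0,0,0; content is parented to the tracked marker.
    return [
      "<a-scene embedded arjs>",
      '  <a-marker preset="hiro">',
      `    ${content}`,
      "  </a-marker>",
      "  <a-entity camera></a-entity>",
      "</a-scene>",
    ].join("\n");
  }
  if (origin === "marker") {
    // Marker defines 0,0,0; content sits in the scene and the camera moves.
    return [
      "<a-scene embedded arjs>",
      `  ${content}`,
      '  <a-marker-camera preset="hiro"></a-marker-camera>',
      "</a-scene>",
    ].join("\n");
  }
  throw new Error(`Unknown origin: ${origin}`);
}

console.log(sceneFor("camera", "<a-box></a-box>"));
console.log(sceneFor("marker", "<a-box></a-box>"));
```

Seeing the two side by side makes it clearer why content written for one arrangement does not simply drop into the other: its parent element, and therefore its coordinate frame, is different.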

After presenting what I had in my PR meeting, Professor Miller and I discovered that the videos did not load on Apple devices (iPhones, iPads, Macs, etc.). I did some research and found that this is caused by these devices using Safari as their browser (on iOS, the other options like Chrome, Opera, and Firefox are actually shells around the same browser). And Safari is very restrictive when it comes to videos, requiring user input in order to play a video. So the solution is simple: just add an event handler that tracks any user input and loads the video for iOS, right?

I tried writing a simple JavaScript handler that tracked user input, but the video still didn't play on iOS. Usually at this point, I would go to the documentation site and Stack Overflow to find demos or a solution, but there was nothing! All the searches I ran led to people asking about this issue, which would lead to one of three GitHub issue threads of people complaining about it. And the few solutions posed in these threads were out of date or just didn't work.
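For reference, the general shape of such a gesture handler is sketched below. It is a generic pattern rather than the project's code, and as noted above it was not enough on its own to fix playback in this project. The helper name `playOnFirstGesture` and the choice of `touchend` and `click` as trigger events are assumptions.

```javascript
// Start a video the first time the user touches or clicks the target.
// Generic pattern; not a confirmed fix for the iOS issue described here.
function playOnFirstGesture(target, video, events = ["touchend", "click"]) {
  const handler = () => {
    // Remove every listener so playback is only requested once.
    events.forEach((name) => target.removeEventListener(name, handler));
    const result = video.play();
    // Modern browsers return a promise that rejects when playback is blocked.
    if (result && typeof result.catch === "function") {
      result.catch((err) => console.warn("Playback was blocked:", err));
    }
  };
  events.forEach((name) => target.addEventListener(name, handler));
}

// Browser-only usage: wire the first <video> on the page to the document body.
if (typeof document !== "undefined") {
  const firstVideo = document.querySelector("video");
  if (firstVideo) playOnFirstGesture(document.body, firstVideo);
}
```

Marking the `<video>` element as `muted` and `playsinline` is often suggested alongside this pattern, since Safari otherwise tends to force fullscreen playback or block autoplay with sound.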

I was at a loss, because 1 day of research turned into 2 days, then 5 days, then a week, and then 2 weeks with no progress on fixing the iOS issue. It was frustrating, but with the amount of time I had, I couldn't dwell on it any further. On the plus side, I found a few tidbits through my search that helped refine my code and fix some issues that existed in the previous version. So with the code in a presentable state, it was time to add the story.

Originally, I was going to use the children's book I created for my first spring Grad Studio (516) course. While writing the code, though, I started leaning towards using Eric Carle's "The Very Hungry Caterpillar" as the prototype book. However, when I reread the book, I couldn't really come up with any interesting animations to go along with the visuals (outside of the caterpillar wiggling). So I instead decided to use Dr. Seuss' "The Cat in the Hat" and adapt the first three-ish pages. As I mentioned above, my workflow consisted of obtaining a digital copy of the book, breaking up the objects on each page into their own layers in Photoshop, importing them into After Effects, animating the scenes that would have animation, and then going through the exporting process.

I also looked into incorporating sound effects and narration into each page, but as with the iOS issue, I had a hard time finding any information about playing multiple audio files or playing specific audio when a specific marker is shown. So sadly, I wasn't able to implement audio.
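One direction that might be worth exploring for per-page audio is the pair of `markerFound` and `markerLost` events that AR.js fires on marker elements. The sketch below is untested in this project and only shows the wiring; `bindPageAudio`, the element ids, and the idea of one audio element per page are assumptions.

```javascript
// Play a page's audio while its marker is visible and pause it when lost.
// Untested idea for this project; ids and structure are hypothetical.
function bindPageAudio(markerEl, audio) {
  markerEl.addEventListener("markerFound", () => {
    const result = audio.play();
    // Browsers may block playback that does not follow a user gesture.
    if (result && typeof result.catch === "function") {
      result.catch((err) => console.warn("Audio was blocked:", err));
    }
  });
  markerEl.addEventListener("markerLost", () => {
    audio.pause();
  });
}

// Browser-only usage: pair each page marker with its own <audio> element.
if (typeof document !== "undefined") {
  const marker = document.querySelector("#page1-marker");
  const narration = document.querySelector("#page1-audio");
  if (marker && narration) bindPageAudio(marker, narration);
}
```

Calling the helper once per marker keeps each page's sound independent of the others.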

And just to be clear, this book is being used only for EDUCATIONAL PURPOSES. Just feel I need to explicitly state that.

With all that being said, I am proud to present my AR Storybook, which I'm referring to as St*AR*ybook henceforth! There are two links below: Camera and Patterns.

In addition, here is the video demonstrating the project in the event the program breaks:

“a-video – A-Frame.” A. Accessed November 3, 2021. https://aframe.io/docs/1.2.0/primitives/a-video.html.

“3D Visualization Software for AR & VR - Create, Publish, Share: Brio.” BRIOXR, July 28, 2021. https://experience.brioxr.com/.

“Adobe: Creative, Marketing and Document Management Solutions.” Accessed November 3, 2021. https://www.adobe.com/.

Alexander, Agustin. “Transparent Video (Alpha Channel)on A-Frame and Ar.js.” Medium. Medium, March 13, 2019. https://medium.com/@agusalexander8/transparent-video-alpha-channel-on-a-frame-and-ar-js-96a8705465ff.

Anthony Budd, Piotr Adam Milewski, Belfield, and Mohamed Ismail. “Is It Possible to Use Custom Markers?” Stack Overflow. https://stackoverflow.com/questions/45820170/is-it-possible-to-use-custom-markers/45823557#45823557.

Ar.js examples. Accessed November 3, 2021. https://stemkoski.github.io/AR.js-examples/index.html.

Aryan, and Piotr Adam Milewski. “In AR.JS When Load Model ,Show a Loading Screen.” Stack Overflow. https://stackoverflow.com/questions/57051773/in-ar-js-when-load-model-show-a-loading-screen.

“Asset Management System – A-Frame.” A. Accessed November 3, 2021. https://aframe.io/docs/0.8.0/core/asset-management-system.html#sidebar.

emiliusvgs. “ARJS Tutorial - Image Tracking with A-Frame | 3D & Video.” YouTube. YouTube, May 21, 2020. https://www.youtube.com/watch?v=pJdNqBbScO8.

Etienne, Alexandra. “How to Create Your Own Marker ?” Medium. ARjs, July 11, 2017. https://medium.com/arjs/how-to-create-your-own-marker-44becbec1105.

Etienne, Jerome. Ar.js marker training. Accessed November 3, 2021. https://jeromeetienne.github.io/AR.js/three.js/examples/marker-training/examples/generator.html.

Etienne, Jerome. “Creating Augmented Reality with Ar.js and A-Frame – A-Frame.” A. Accessed November 3, 2021. https://aframe.io/blog/arjs/.

Gopinath Kaliappan, Chamin Wickramarathna, and Mark. “How to Create Custom Marker in Ar.js?” Stack Overflow. https://stackoverflow.com/questions/47644935/how-to-create-custom-marker-in-ar-js.

gtk2k. “Gtk2k.Github.io/animation_gif at Master · gtk2k/gtk2k.Github.io.” GitHub. Accessed November 3, 2021. https://github.com/gtk2k/gtk2k.github.io/tree/master/animation_gif.

Jeromeetienne. “I Can Not View Videos ... · Issue #285 · Jeromeetienne/Ar.js.” GitHub. Accessed November 3, 2021. https://github.com/jeromeetienne/AR.js/issues/285.

“Marker Based.” Marker Based - AR.js Documentation. Accessed November 3, 2021. https://ar-js-org.github.io/AR.js-Docs/marker-based/.

Mayognaise. “Mayognaise/Aframe-Gif-Shader: A Shader to Display GIF for A-Frame VR.” GitHub. Accessed November 3, 2021. https://github.com/mayognaise/aframe-gif-shader.

“PlayCanvas - the Web-First Game Engine.” PlayCanvas.com. Accessed November 3, 2021. https://playcanvas.com/.

“Powerful Tools to Create Extraordinary Webar, AR, WebVR Experiences.” 8th Wall. Accessed November 3, 2021. https://www.8thwall.com/.

pravid, and Angelo Joseph Salvador. “A-Frame AR.JS Marker Pattern Not Working.” Stack Overflow. https://stackoverflow.com/questions/47000523/a-frame-ar-js-marker-pattern-not-working.

“Rakugakiar.” Whatever Inc. Accessed November 3, 2021. https://whatever.co/work/rakugakiar/.

Rodrigocam. “RODRIGOCAM/AR-Gif: Easy to Use Augmented Reality Web Components.” GitHub. Accessed November 3, 2021. https://github.com/rodrigocam/ar-gif.

Schmelyun, Andrew. “Tips + Tricks to Spice up Your Ar.js Projects.” Medium. Medium, September 16, 2018. https://medium.com/@aschmelyun/tips-tricks-to-spice-up-your-ar-js-projects-fa89bc2ec296.

tgpfeiffer, and Frédéric Bussière. “How to Improve Marker Detection on Mobile Browser with Ar.js.” Stack Overflow. https://stackoverflow.com/questions/62322532/how-to-improve-marker-detection-on-mobile-browser-with-ar-js.

Tobias Fandrich, mattorp, and Arman Nadjari. “Ar.js Can't Get Video or Picture Working with NFT Only 3D.” Stack Overflow. https://stackoverflow.com/questions/61773016/ar-js-cant-get-video-or-picture-working-with-nft-only-3d.

“UI and Custom Events.” UI and Events - AR.js Documentation. Accessed November 3, 2021. https://ar-js-org.github.io/AR.js-Docs/ui-events/.

“Veles Slavic Symbol - Worldwide Ancient Symbols.” Symbolikon, April 18, 2020. https://symbolikon.com/downloads/veles-slavic/.